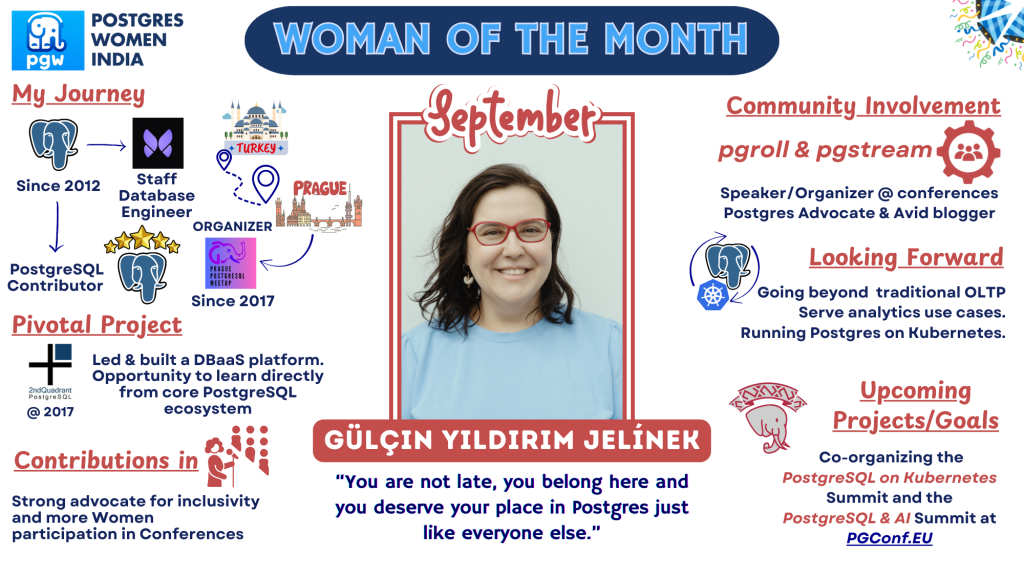

Postgres Woman of the Month, September 2025: Gülçin Yıldırım Jelínek

Introduction:

Hello, my name is Gülçin Yıldırım Jelínek. I work at Xata, a modern PostgreSQL platform focused on enabling realistic staging environments with features such as copy-on-write instant branching, data anonymization and zero-downtime schema migrations.

Originally from Turkey, I have been living in Prague for more than eight years. I hold a degree in Applied Mathematics from Yildiz Technical University (Istanbul, Turkey) and a master’s degree in Computer and Systems Engineering from Tallinn Technical University (Tallinn, Estonia). I have been working professionally with databases for about 15 years, with a particular focus on PostgreSQL since 2012.

I am a co-founder of Postgres Women, a former member of the PostgreSQL Europe Board of Directors and the main organizer of the Prague PostgreSQL Meetup, which I have been running since 2017. I am also a co-founder and general coordinator of Kadin Yazilimci (Women Developers), a volunteer-based organization dedicated to closing the gender gap in IT in Turkey. As part of this initiative, we organize an annual conference called Diva: Dive into AI, with the fourth edition planned for 2026 in Istanbul.

I was recently recognized as a PostgreSQL Contributor and am committed to supporting the growth of the Postgres project while contributing to its longevity and success.

Journey in PostgreSQL:

I began my career working with databases such as Oracle and DB2, first in the IT department of the largest private hospital in Turkey and later at the country’s largest commercial bank. In 2012, I received an offer to become the DBA at a startup using PostgreSQL. That marked my transition to Postgres, a decision I have never looked back on.

(II) Can you share a pivotal moment or project in your PostgreSQL career that has been particularly meaningful to you?

I started working at 2ndQuadrant in 2017, a company founded by the late Simon Riggs. There, I had the opportunity to manage customer databases at scale, develop automation for PostgreSQL administration and lead the effort to build a Database-as-a-Service platform. At the time, this was ahead of its era: only a handful of companies were offering Postgres-as-a-Service. As part of this platform, we delivered Postgres Distributed (formerly known as BDR), supporting not only physical replicas but also multi-master, always-on architectures with integrated backup and restore, elastic scaling, monitoring and optimized PostgreSQL configuration.

I also feel incredibly fortunate to have worked alongside major PostgreSQL contributors at 2ndQuadrant and later at EDB. It was a unique opportunity to learn directly from the people who helped build core PostgreSQL features such as hot standby, streaming replication and logical decoding, as well as extensions like pglogical and critical ecosystem projects including PgBouncer.

Contributions and Achievements:

When I first began speaking at PostgreSQL conferences, I was often the only woman present. On rare occasions, there might be one other woman on the schedule, or a few in the audience or on the organizing team. I have consistently advocated for inclusivity, and I am proud to say that the situation has improved significantly since then. Today, we see more women participating in the Postgres community across Europe, and I hope it feels less intimidating for newcomers. I like to think I have had a small impact on this change, and I continue to advocate for a stronger, more inclusive community. I consider myself a natural community builder: I help organize conferences, run meetups and speak regularly about Postgres, and I am proud of these efforts.

(II) Have you faced any challenges in your work with PostgreSQL, and how did you overcome them?

In the beginning, there were some obstacles, mainly due to language barriers. When working in Turkey and contributing to the Postgres mailing lists, we often spent a great deal of time perfecting our English, trying to write messages that were as open, descriptive and to the point as possible. The lack of PostgreSQL resources in Turkish was also a significant challenge. Today, things are much easier: with the help of LLM-based tools, writing clear emails and translating complex terms into one’s native language has become far more accessible.

Community Involvement:

I engage with the PostgreSQL community in multiple ways: submitting talk proposals, speaking at and attending conferences, and helping to organize conferences and meetups. I also contribute through blog posts and ongoing advocacy for Postgres. In addition, I work on open-source tools such as pgroll and pgstream. Being part of the ecosystem, whether through direct feedback after talks or issues raised by users of these tools, keeps me closely connected to the community and aware of its needs.

(II) Can you share your experience with mentoring or supporting other women in the PostgreSQL ecosystem?

I am always open to mentoring, and running the Kadin Yazilimci organization gives me a platform to support other women in tech. My main focus has always been on young students, and over the years some of them have even become my colleagues. Seeing this growth is incredibly rewarding and continues to motivate my volunteer work.

Within Postgres Women, we have an unofficial Telegram group where we share news and opportunities with one another. I also make use of funds provided by the companies I have worked with to host gatherings such as breakfasts and dinners for women in Postgres, helping to foster in-person connections whenever possible. I am grateful to the sponsors who make these meetings possible.

Insights and Advice:

You are not late, you belong here, and you deserve your place in Postgres just like everyone else.

(II) Are there any resources (books, courses, forums) you’d recommend to someone looking to deepen their PostgreSQL knowledge?

I recommend following Planet PostgreSQL, a blog aggregator featuring posts from community members; it is my go-to source for staying up to date. I am also subscribed to the Postgres Weekly newsletter and track PostgreSQL (and Postgres) mentions on Hacker News and Reddit. While there are many excellent books on Postgres, I would like to highlight a recent one: Decode PostgreSQL: Understanding the World’s Most Powerful Open-Source Database Without Writing Code by Ellyne Phneah.

Looking Forward:

I am interested in how PostgreSQL can support different workloads. For example, how can we make Postgres the default database for AI and agents by improving vector support, which core PostgreSQL does not currently provide natively? I’m also curious about how Postgres can go beyond its traditional OLTP role and serve analytics use cases effectively. Another area that excites me is running Postgres on Kubernetes. At Xata, we are using the CloudNativePG (CNPG) operator in our new platform, and I often think about ways to make Postgres run even more smoothly in Kubernetes environments. Finally, I believe extension management is still more complex than it should be, and there is room for improvements that would make the experience more seamless and comfortable for users.

(II) Do you have any upcoming projects or goals within the PostgreSQL community that you can share?

I am co-organizing the PostgreSQL on Kubernetes Summit and the PostgreSQL & AI Summit at PGConf.EU, both taking place on the first day of the conference, October 21. I am excited to bring people together for focused discussions on these important developments in Postgres, so that we can exchange ideas, learn from one another and ultimately share those insights with the wider PostgreSQL project.

Personal Reflection:

It may sound like a cliché, but over the years the Postgres community has come to feel like a second family. I have been fortunate to make great friends, watch our children grow up and see conferences double or even triple in size as PostgreSQL’s popularity has soared. I hope to always remain a part of this community.

(II) How do you balance your professional and personal life, especially in a field that is constantly evolving?

I try to keep my main focus on the projects I work on while also staying informed about developments in the field. I follow discussions that interest me on the mailing lists, and given the nature of my work, I also keep track of the features offered by other Postgres providers. We are very much a “Postgres family,” so Postgres often finds its way into personal conversations with my husband, who is also a major Postgres contributor. Our kid was able to pronounce “Postgres” correctly before she turned three 🙂

Message to the Community:

There are many factors that contribute to a community’s health and longevity. For Postgres, we need diverse voices and backgrounds that bring new ideas to the project. PostgreSQL is a proven technology with countless opportunities, and expertise in Postgres will never go out of fashion. The best time to start working with Postgres is today!